In this new era, the technology behind robot intelligence is more sophisticated than you might think.

Alan Mathison Turing, a pioneer of computer science and cryptography, published the paper "Computing Machinery and Intelligence" in 1950 and proposed a classic test: if a machine converses with humans, and in more than 30% of the trials the humans wrongly believe they are talking to another human rather than a machine, then the machine can be said to be intelligent. This is the well-known "Turing test" in the field of artificial intelligence. Turing predicted that computers would pass this test by the end of the 20th century. In fact, it was not until 2014 that a piece of artificial intelligence software, Eugene Goostman, was reported to have passed the Turing test for the first time. This reflects an uncomfortable fact: although the idea was put forward more than 70 years ago, there is still a long way to go before robots can be endowed with "souls". So here comes the question: now that artificial intelligence is developing relatively rapidly, what can we do to let robots interact with us?


Let robots "see" the world

Humans are the smartest creatures on earth, and 83% of the information we obtain comes through vision, 11% through hearing, 3.5% through smell, 1.5% through touch, and 1% through taste. To simulate the human way of thinking, the core task is to let the machine use deep learning to respond appropriately to the data it collects. Considering that most of our information comes from vision,


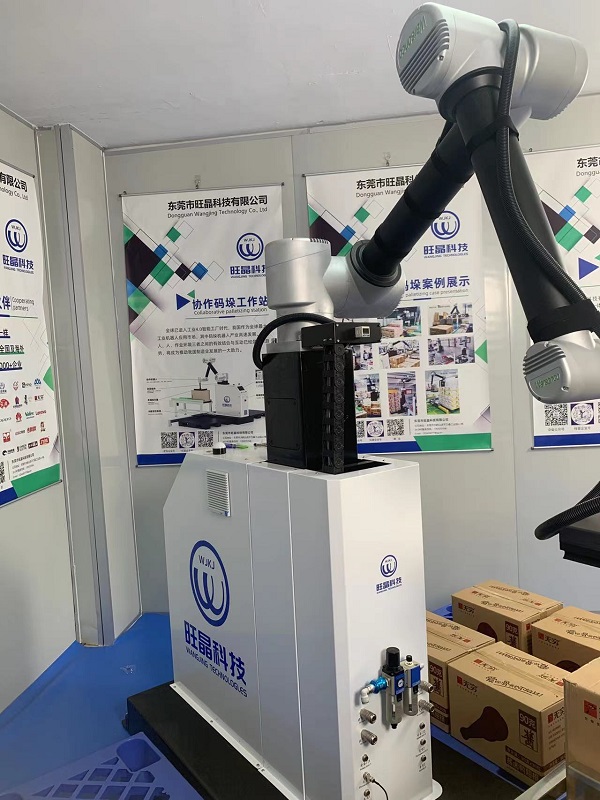

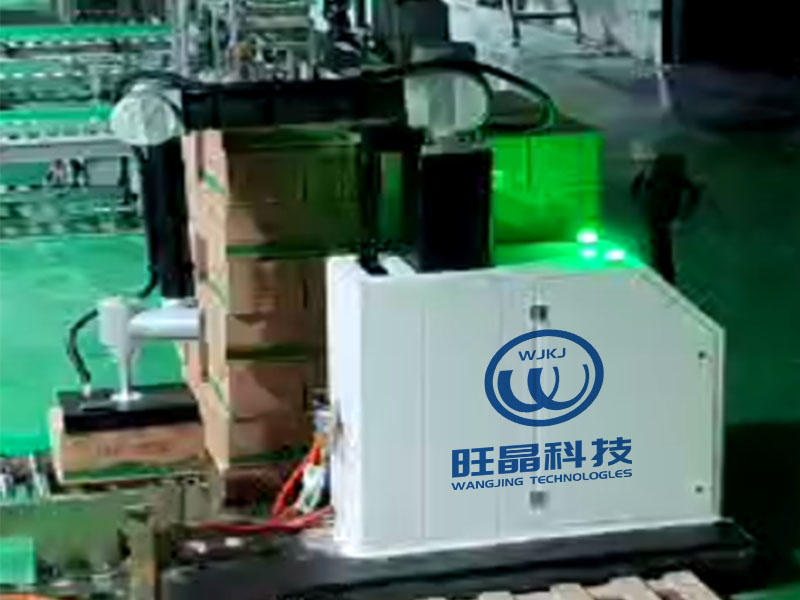

Even the most common factory robots are equipped with a wealth of sensors.

With the continuous evolution of AI vision technology, capabilities such as object recognition, target tracking, navigation and obstacle avoidance have become common front-end technologies for all kinds of intelligent devices. Examples can be found in industrial automation, assembly line control, autonomous driving, security monitoring, remote sensing image analysis, unmanned aerial vehicles, agriculture, robotics and other fields. For example, the nucleic acid sampling robot developed by the Shanghai Institute of Artificial Intelligence uses visual sensors to check whether the position of the face and the opening of the mouth meet the sampling requirements. After the robotic arm extends the cotton swab into the mouth, an endoscopic vision system inspects the oral environment, identifies the tonsils and guides the swab to collect secretions near them. A force control sensor feeds back force data in real time and keeps the force of the robotic arm within a safety threshold, so that an unmanned nucleic acid sampling is completed in about 22 seconds. This is a typical automated robot that relies on environmental awareness.
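The force-limiting step described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `ForceLimiter` class, the 1.0 N threshold and the simulated sensor readings are hypothetical and are not taken from the sampling robot itself.

```python
from dataclasses import dataclass


@dataclass
class ForceLimiter:
    """Keeps a commanded arm force within a safety threshold (hypothetical values)."""

    max_force_n: float = 1.0  # assumed safety threshold in newtons, not from the source

    def next_command(self, desired_force_n: float, measured_force_n: float) -> float:
        """Return the force to command on the next control tick.

        If the sensor already reads at or above the threshold, back off to zero;
        otherwise clamp the desired force into the range [0, max_force_n].
        """
        if measured_force_n >= self.max_force_n:
            return 0.0
        return max(0.0, min(desired_force_n, self.max_force_n))


limiter = ForceLimiter(max_force_n=1.0)
readings = [0.2, 0.6, 1.3, 0.4]  # simulated force sensor feedback, one value per tick
commands = [limiter.next_command(0.8, r) for r in readings]
print(commands)  # [0.8, 0.8, 0.0, 0.8]
```

A real controller would run at a fixed rate and ramp the force smoothly rather than cutting it to zero, but the sketch shows the basic idea of closing the loop on sensor feedback.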

By combining visual and tactile sensors, the nucleic acid sampling robot can complete unmanned sampling tasks.

Mobile robots need a variety of sensors to achieve environmental awareness. For example, you may have seen automatic food delivery robots in restaurants, or the transport robots common in factories. Equipped with lidar, stereo cameras, infrared and ultra-wideband sensors, they can "identify" their environment and build maps, which gives them the ability to recognize, perceive, understand, judge and act. Perceiving the environment is the most basic function of a robot, and it makes such robots well suited to service-oriented work. Today, robots can also monitor people for abnormal behavior, detect and record people, and raise alarms for abnormally high temperatures, fires or abnormal environmental data. With modular equipment, or even remote monitoring modules, they can take the place of people in entering dangerous places and completing survey tasks.

Let the robot "talk"

If a robot completes its work through environmental awareness alone, is it really "smart"? From a human point of view, such robots are just automatic tools, and there is an obvious gap between them and the intelligent robots we imagine from films. This impression arises largely because most service robots cannot interact with people, while the core of human interaction is conversation. The Turing test proposed more than 70 years ago is still conducted in written form. If it were redefined today, voice interaction should be a required part. Bill Gates once said that "the cognitive habits and communication forms between human and nature must be the development direction of human-computer interaction".

A seemingly simple conversation involves many analysis steps.

Human-computer interaction technology mainly includes speech recognition, semantic understanding, face recognition, image recognition, and motion-sensing/gesture interaction. Among these, voice-based human-computer interaction involves information input and output, speech processing, semantic analysis, intelligent logic processing, and the integration of knowledge and content. At present, AI speech technology can be divided into near-field speech and far-field speech. Near-field speech mostly serves auxiliary needs; Apple Siri and Microsoft Xiaoice (Xiaobing), for example, are near-field voice products. Many smart speakers support far-field speech, so that users can control smart home devices with voice commands from 5 meters away. These seemingly simple tasks actually demand high accuracy. In terms of processing, the system first uses acoustic processing to handle the speaker's voice and the surrounding environment, then converts the speech it hears into text through speech recognition, and then analyzes the meaning of that text through semantic understanding. Finally, the machine either executes the user's instruction or uses speech synthesis to turn the content to be expressed into speech.
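The four stages described above (acoustic processing, speech recognition, semantic understanding, then execution or speech synthesis) can be sketched as a chain of functions. This is only a toy: the "audio" is plain text, and every function name, the `Intent` type and the keyword rules are hypothetical stand-ins for real acoustic and language models.

```python
from dataclasses import dataclass


@dataclass
class Intent:
    action: str
    target: str


def acoustic_frontend(raw_audio: str) -> str:
    """Stand-in for noise suppression: strips a '[noise]' marker from fake audio."""
    return raw_audio.replace("[noise]", "").strip()


def speech_to_text(clean_audio: str) -> str:
    """Stand-in for speech recognition: the 'audio' here is already text."""
    return clean_audio.lower()


def understand(text: str) -> Intent:
    """Toy semantic understanding based on keyword rules."""
    words = text.split()
    if "turn" in words and "on" in words:
        return Intent("turn_on", words[-1])
    if "turn" in words and "off" in words:
        return Intent("turn_off", words[-1])
    return Intent("unknown", "")


def respond(intent: Intent) -> str:
    """Return the text that a speech synthesizer would read back to the user."""
    if intent.action == "unknown":
        return "Sorry, I did not understand that."
    verb = "on" if intent.action == "turn_on" else "off"
    return f"Turning {verb} the {intent.target}."


def handle_utterance(raw_audio: str) -> str:
    """Run the four stages in order."""
    return respond(understand(speech_to_text(acoustic_frontend(raw_audio))))


print(handle_utterance("[noise] Turn on the lamp"))  # Turning on the lamp.
```

Keeping each stage as a separate function mirrors how an error in one step, such as a misheard word, propagates to every later step.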

The semantic understanding ability of robots is still at a low level.

In real environments, noise and other environmental factors mean that machines still cannot recognize natural language completely and accurately. When a machine converts the speech it hears into text, accent and grammar affect the success rate. Moreover, human language is highly complex: word boundary ambiguity, polysemy, syntactic ambiguity and dependence on context all make it hard to interpret. Chinese also has a large number of dialects, so semantic understanding is a huge obstacle. As a result, current AI voice systems are mostly used in vertical scenarios, such as in-car voice assistants, children's entertainment and education software, and AI customer service. Many people will have received automated customer service calls from banks or financial institutions. In most cases these systems sound much like a real person, but they seriously lack flexibility and can only communicate within a relatively narrow range, with low accuracy. Even so, they respond quickly to customer needs and save a certain amount of time and labor cost, so they will continue to evolve as they become more widely adopted.

Let robots become smarter

Now that we have mentioned the intelligent evolution of robots, some readers may ask: how does that evolution happen? The most widely known method is deep learning. As early as 2011, researchers in one of Google's laboratories extracted 10 million still images from video websites and "fed" them to Google Brain in order to find duplicate images. It took Google Brain three days to complete this challenge. Google Brain is a deep learning model of 1 billion neural units running on 1,000 computers with 16,000 processors.

Robot grasping posture discrimination

The concept of deep learning comes from research on artificial neural networks. In essence, it means building a machine learning model with multiple hidden layers. By training on large-scale data, the model learns a large number of more representative features, which it uses to classify and predict samples with higher accuracy. Take grasping posture recognition as an example. A human needs only a glance or two to know how to pick something up, but for a robot this is a great challenge. The research involved covers intelligent learning, grasping posture discrimination, robot motion planning and control, and more. In addition, the robot needs to adjust its grasping posture and force according to the material properties of the object being grasped.

However, building a powerful neural network requires more processing layers and strong data processing capabilities, so deep learning often relies on upstream hardware giants. In recent years, the rapid development of graphics processors, supercomputers and cloud computing has brought deep learning to the fore, and chip giants such as Nvidia, Intel and AMD have all moved to the center of the AI stage. Deep learning relies on a large number of examples: the more data a model learns from, the smarter it becomes. Because big data is indispensable, IT giants with large amounts of data, such as Google, Microsoft and Baidu, currently do best in deep learning. Deep learning also outperforms traditional machine learning methods in speech recognition, computer vision, language translation and other fields, and it even surpasses human ability in face verification and image classification.

For example, the AI "face swapping" popular in the short-video era works by exporting the faces in the original video frame by frame and then training a model on a large number of photos of the replacement face. During training, you can watch the replaced face gradually sharpen from a blur. Depending on the computer's configuration, a few hours to a few dozen hours of training can yield a fairly good result. This is a typical deep learning process.
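To make the idea of a model with multiple hidden layers more concrete, here is a minimal sketch of a forward pass through a small fully connected network in plain Python. The layer sizes, the random untrained weights and the three output classes are all assumptions chosen for illustration. A real system would learn the weights from large amounts of data, which this sketch does not do.

```python
import math
import random


def relu(values):
    """Hidden-layer activation: negative values become zero."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    """One fully connected layer; weights holds one row per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def init_layer(rng, n_in, n_out):
    """Random, untrained weights and zero biases."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(features, layers):
    """Apply ReLU after every hidden layer and softmax after the last layer."""
    activations = features
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(activations, weights, biases))


rng = random.Random(0)
sizes = [4, 8, 8, 3]  # 4 inputs, two hidden layers of 8 units, 3 classes (all assumed)
layers = [init_layer(rng, a, b) for a, b in zip(sizes, sizes[1:])]
probs = forward([0.5, -0.2, 0.1, 0.9], layers)
print(probs)  # three class probabilities; meaningless until the network is trained
```

Training would adjust the weights so that these probabilities match labeled examples, which is where the large datasets and hardware mentioned above come in.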

Posture recognition is also one of the focuses of robot vision learning.

For robots, deep learning has many applications beyond image recognition, such as the route planning and indoor navigation in complex environments that industrial or security robots require, and educational robots that recognize whether students are sitting, raising their hands or falling. In the future, computing may increasingly be combined with big data and cloud computing, so that robots can use cloud platforms to store resources and learn independently. In a big data environment, large numbers of robots could also share what they learn, combine their learning models, and analyze and process massive amounts of data more effectively, improving learning and working efficiency and unlocking the potential of intelligent robots. Of course, many problems remain. Because the combination of robots and cloud platforms is not yet mature, resource allocation, system security, reliable and efficient communication protocols, and breaking through the technical barriers between major upstream manufacturers are all issues to be addressed next.
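The text does not say how robots would combine their learning models on a cloud platform. One possible interpretation, shown here purely as an assumption, is a weighted average of each robot's model parameters, similar in spirit to federated averaging. The function name `average_models` and the example numbers are hypothetical.

```python
def average_models(models, sample_counts):
    """Weighted average of parameter vectors.

    Each model is a list of floats; each robot's weight is the number of
    samples it learned from. This is an assumed merging rule, not a method
    described in the article.
    """
    if not models or len(models) != len(sample_counts):
        raise ValueError("need one sample count per model")
    total = sum(sample_counts)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    size = len(models[0])
    if any(len(m) != size for m in models):
        raise ValueError("all models must have the same number of parameters")
    return [
        sum(m[i] * n for m, n in zip(models, sample_counts)) / total
        for i in range(size)
    ]


robot_a = [1.0, 2.0]  # parameters learned by one robot from 100 samples
robot_b = [3.0, 6.0]  # parameters learned by another robot from 300 samples
merged = average_models([robot_a, robot_b], [100, 300])
print(merged)  # [2.5, 5.0]
```

Weighting by sample count lets the robot that has seen more data pull the shared model further toward its own parameters. Only parameters are exchanged here, not raw data, which touches on the security and communication concerns raised above.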